Specular Lighting Nuances in the Phong Model

The Phong lighting model can produce some pretty realistic looking simulated light, considering its simplicity. To give a very brief summary, it includes 3 components: ambient, diffuse, and specular lighting. When combined, these components produce a believable lighting effect. The specifics of this model are described elsewhere on the internet, but I wanted to share some nuances that I encountered while implementing the specular component.

I wrote this post over a year ago, but I never got around to publishing it. It contains some of the lessons I learned while implementing my first lighting shader, which I think may be useful to others who are doing the same.

Using View Space

We always have the option to use whichever coordinate space is most convenient when performing any type of 3D computation. For example, world coordinates might be the best choice for computing the distance between two objects in a scene because the calculation is more intuitive. In lighting, I found that view space is the most natural choice.

What advantages does view space have over world space, with respect to lighting? When creating specular highlights, we need the angle between the light’s reflection off a surface and the direction from that surface to the camera. In view space, the camera always sits at the origin, \((0,0,0)\), so it is cheaper and simpler to find the direction vector from the camera to a fragment: it’s simply the coordinate of the fragment (and negating it gives the direction from the fragment back to the camera). If we were using world space, we would need to pass the camera’s position to the lighting shader.
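To make the difference concrete, here is a minimal sketch of the two ways to get the direction from a fragment to the camera. The function names and the `cameraPosWorld` parameter are hypothetical, introduced only for illustration.

```glsl
// World space: the shader needs the camera's position as an extra input
// (cameraPosWorld is a hypothetical parameter, e.g. fed from a uniform).
vec3 viewDirWorldSpace(vec3 fragPosWorld, vec3 cameraPosWorld)
{
    return normalize(cameraPosWorld - fragPosWorld);
}

// View space: the camera sits at the origin, so the direction from the
// fragment to the camera is just the negated fragment position.
vec3 viewDirViewSpace(vec3 fragPosView)
{
    return normalize(-fragPosView);
}
```

Both functions describe the same geometric direction, just expressed in different coordinate spaces. The view-space version simply has one fewer input to keep in sync.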

To get accurate results, we need to ensure that all vectors are in the same coordinate space. Since we already know that the camera is at \((0,0,0)\), we just need to be sure that the model’s normal vectors and the light’s position are in view space as well. It’s easy to forget to transform the normals, which can cause some pretty funny visual bugs (the light behaves as if each face were pointing in a different direction than it actually is). For example, here’s an abbreviated vertex shader that converts normals to view space:

    void main()
    {
        // Use a transform matrix that includes perspective projection to compute
        // each vertex's position
        gl_Position = transformMatrix * vec4(_coord, 1.0);

        // Use the view matrix to transform each fragment's position and the surface
        // normal into view space. Notice we set the W component of the normal to
        // 0.0 since it's a direction.
        normal = vec3(viewMatrix * vec4(_normal, 0.0));
        fragPos = vec3(viewMatrix * vec4(_coord, 1.0));
    }
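The shader above handles the normals and vertex positions; the light’s position needs the same treatment. Below is a small sketch of how that might look. The `lightPosWorld` uniform and the helper names are assumptions made for illustration, and in practice this transform is often done once on the CPU rather than in the shader.

```glsl
uniform mat4 viewMatrix;
uniform vec3 lightPosWorld; // hypothetical: the light's position in world space

// Points use w = 1.0 so the view matrix's translation is applied.
vec3 pointToViewSpace(vec3 p)
{
    return vec3(viewMatrix * vec4(p, 1.0));
}

// Directions use w = 0.0 so only the rotation part is applied.
vec3 directionToViewSpace(vec3 d)
{
    return vec3(viewMatrix * vec4(d, 0.0));
}

// The light is a point, so it is transformed like a position.
vec3 lightPosViewSpace()
{
    return pointToViewSpace(lightPosWorld);
}
```

Transforming normals with the plain matrix, as done here, is only safe when the matrix contains no non-uniform scaling. If a model matrix with non-uniform scale is involved, the usual fix is to transform normals with the inverse transpose of the upper-left 3x3 block instead.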

Specular Highlight Movement

When I first implemented Phong lighting, I had some questions about how camera rotation should affect the location of specular highlights. On one hand, if we think purely about the physics, the location of the camera, not the direction it is pointing, should determine the location of the highlight. However, from personal experience, the glare on a shiny surface does move as your head turns. So, should the camera’s direction factor into the location of a specular highlight? As the camera rotates, should the highlight move?

I found at least one other person on an online forum that had similar questions, but I couldn’t find any direct answers. The answer is simple - only the position, not the direction, of the camera is needed to calculate the position of the highlight. Why does turning your head change the position of a glare in real life? Your head swivels on your neck, which is behind your eyes by at least a few inches, so turning your head also changes the position of your eyes!

This isn’t exactly a groundbreaking realization. But, it does make me wonder if rotating the camera around an axis slightly behind the camera would give a more realistic feeling to a first person game.

Eliminating Reflection

Since specular highlights represent a light’s reflection from a surface into the camera, we need to measure how directly the light is reflected. The more directly the light is reflected into the camera, the brighter the highlight. Naively, we can reflect the light direction over the surface normal, then take the dot product between this reflection vector and the direction from the fragment to the camera, like this:

    vec3 lightDir = normalize(lightPos - fragPos);
    vec3 reflectDir = reflect(-lightDir, normal);
    vec3 viewDir = normalize(-fragPos);
    float cosAngle = dot(viewDir, reflectDir);

However, reflect is a somewhat expensive operation. This article provides a pretty good explanation of how this function is implemented, but the gist is that it involves a dot product, a few multiplications, and one vector subtraction. Can we do better?
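For reference, the built-in `reflect(I, N)` is specified as `I - 2.0 * dot(N, I) * N`. A hand-written equivalent, shown here only to make the cost visible (the name `reflectManual` is hypothetical), looks like this:

```glsl
// Hand-written equivalent of the built-in reflect(I, N), for illustration only.
// I is the incident direction; N is assumed to be normalized.
vec3 reflectManual(vec3 I, vec3 N)
{
    // One dot product, a scalar multiply, a scalar-vector multiply,
    // and one vector subtraction.
    return I - 2.0 * dot(N, I) * N;
}
```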

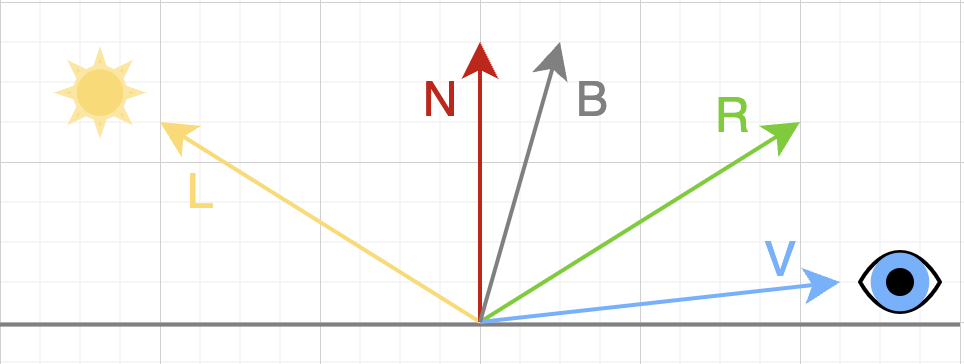

There is a clever alternative that avoids this reflection, described by the Blinn-Phong model. Instead of actually computing the reflection vector, we can take the bisection of the light direction and the direction from the fragment to the camera. Bisecting two vectors is as simple as taking their “average”. As the light’s reflection becomes more direct, the bisector will more closely align with the surface normal. This is easier to understand visually:

When implementing Blinn-Phong, we never actually compute R directly; it is only shown to demonstrate that as V approaches R, B approaches N. Notice that when all of these vectors lie in the same plane, as in the diagram, the angle between V and R is always twice the angle between N and B. Also, remember that the dot product of two unit vectors produces the cosine of the angle between them, not the angle itself. Because cosine is not linear in the angle, we can’t simply double the dot product to compensate. For this reason, Blinn-Phong produces a slightly different visual effect.
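To see the relationship explicitly, assume V, R, N, and B are all unit vectors lying in one plane, and let \(\theta\) be the angle between N and B. The double-angle identity then relates the two dot products:

\[
V \cdot R = \cos(2\theta) = 2\cos^2\theta - 1 = 2\,(N \cdot B)^2 - 1
\]

So the Phong term is a quadratic function of the Blinn-Phong term, not a scaled copy of it. For small angles, \(\cos^{n}(2\theta) \approx \cos^{4n}(\theta)\), which is why a common rule of thumb is to use a shininess exponent roughly four times larger with Blinn-Phong to get a highlight of similar size. This is only an approximation, and it breaks down when the vectors leave the common plane.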

A Blinn-Phong implementation looks like this:

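    vec3 lightDir = normalize(lightPos - fragPos);
    vec3 viewDir = normalize(-fragPos);
    // Dividing the sum by 2 is unnecessary because normalize discards the length.
    vec3 bisector = normalize(lightDir + viewDir);
    // Compare the bisector against the surface normal; no reflection vector needed.
    float cosAngle = dot(normal, bisector);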

In the end, I’m not sure that this optimization really buys us anything, or whether Blinn-Phong should be seen as an optimization at all. If performance were my only goal, I would rely solely on benchmarks to decide between Blinn-Phong and Phong. However, in my opinion, Blinn-Phong produces more dramatic lighting because highlights appear elongated at shallow angles.

[Comparison images: Blinn-Phong, then Phong]

Preventing Impossible Specular Highlights

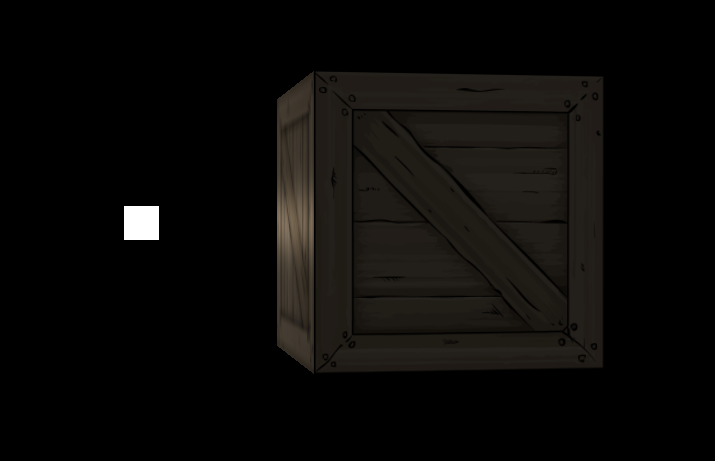

On my first pass through the Phong model, I noticed something weird. At shallow angles, specular highlights were appearing, even when the light source was behind the surface. To demonstrate, I reproduced this bug in my own engine. Notice that the top face of the crate lights up, even though the light source has fallen behind the crate.

Why does this happen? The specular highlight calculation only considers whether light should be reflected from a fragment into the camera based on the angles between them, but doesn’t care whether light is passing through a surface to get there. To fix this, we could choose to add an if statement to check that the dot product between the light and the surface normal is positive. However, there’s a better way.

As a minor concern, branching logic within shaders is said to be bad for performance because it can reduce parallelism in the GPU. According to this article, this is still true for modern graphics hardware, although it isn’t true that branching is always bad.

The more compelling argument for change is that we should have already computed this dot product when processing diffuse lighting! Therefore, to fix this bug, we can simply multiply the specular value by the diffuse value, which enforces that only surfaces that already have diffuse lighting can receive specular highlights. As a visual side effect, this means that specular highlights on surfaces that are receiving less diffuse light are not as prominent, which I think looks more realistic.
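Putting this together, a fragment shader along these lines might look like the sketch below. It assumes `lightPos` has already been transformed into view space, and the `lightColor`, `objectColor`, `ambientStrength`, and `shininess` uniforms are hypothetical names added for illustration; they are not from my engine.

```glsl
#version 330 core

in vec3 normal;   // view-space normal from the vertex shader
in vec3 fragPos;  // view-space fragment position from the vertex shader

uniform vec3 lightPos;          // assumed to already be in view space
uniform vec3 lightColor;        // hypothetical
uniform vec3 objectColor;       // hypothetical
uniform float ambientStrength;  // hypothetical, e.g. 0.1
uniform float shininess;        // hypothetical, must be greater than 0.0

out vec4 fragColor;

void main()
{
    // Interpolated normals are generally not unit length, so renormalize.
    vec3 n = normalize(normal);
    vec3 lightDir = normalize(lightPos - fragPos);
    vec3 viewDir = normalize(-fragPos);
    vec3 bisector = normalize(lightDir + viewDir);

    // Diffuse term: zero whenever the light is behind the surface.
    float diffuse = max(dot(n, lightDir), 0.0);

    // Blinn-Phong specular term, scaled by the diffuse term so that
    // surfaces facing away from the light receive no highlight.
    float specular = pow(max(dot(n, bisector), 0.0), shininess) * diffuse;

    vec3 color = (ambientStrength + diffuse) * lightColor * objectColor
               + specular * lightColor;
    fragColor = vec4(color, 1.0);
}
```

Because `diffuse` is clamped to zero for back-facing light, the multiplication removes the impossible highlight without any branching.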